By Felissa Mariz D. Marasigan and Lovely Jovellee Lyn Bruiz

The year 2020 brought a major change to the digital world. As we move our day-to-day activities online, our social networks grow vastly, and new, more diverse threats start creeping in.

In 2017, DeepFakes, media manipulated through artificial intelligence, were primarily used by enthusiasts to generate fake porn videos. A DeepFake is produced with a digital technology in which a person in an existing image or video is replaced with someone else’s likeness. Today, DeepFake applications and services are readily available for anyone to use. These applications enable their users to ‘star’ in famous movies and to look like well-known personalities. This has caused a sudden growth in the amount of manipulated media released on the Internet every day. In fact, a study from Sensity, a threat intelligence company focusing on visual threats, revealed that more than 118,200 DeepFake videos had been uploaded online as of January 2021[1].

This raises concern, as DeepFake’s potential for abuse is now apparent in our society. With it comes a more pressing issue for the cyberworld: DeepFake as the innovation that will further propel the success rate of phishing attacks.

The Rise of GAN: DeepFake’s Backbone

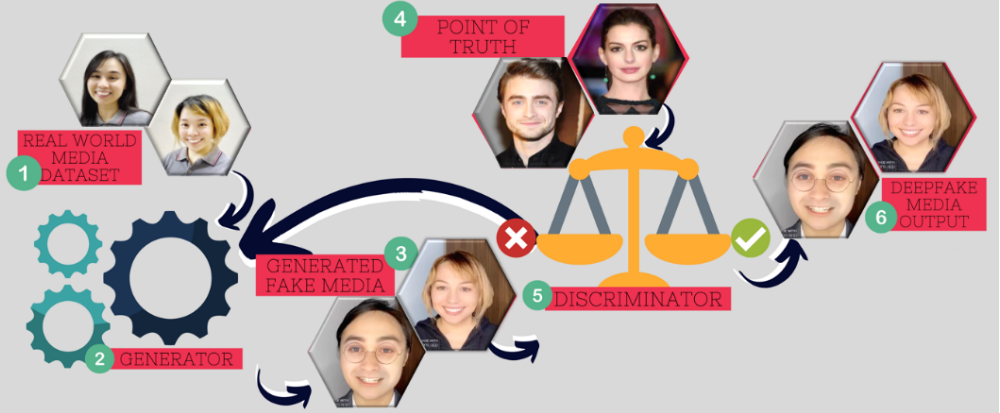

DeepFake media aims to create a parallel truth that deceives people and makes it hard for anyone to discern what is real. But how are DeepFakes created? The Generative Adversarial Network (GAN), proposed by Ian Goodfellow and his colleagues in 2014, is the foundation of DeepFake. It has two major components: the generator and the discriminator. The generator is the ‘creator’ of fake but real-looking media; it studies its datasets in order to create output, and it improves the quality of that output by interpreting feedback from the discriminator. The discriminator is a ‘classifier’ that distinguishes whether a piece of generated media is ‘real’ enough or not. These two sophisticated neural networks are what make DeepFake so threatening even at this early stage.
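The generator and discriminator feedback loop described above can be sketched in a few lines of Python. This is only a toy illustration, not how any DeepFake tool is actually built: real systems use deep neural networks on images, while here the "media" is a single number, the generator is one learnable offset and the discriminator is a logistic classifier. All names (`train_toy_gan`, `real_mean`, and so on) and all parameter values are assumptions made for this example.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function: squashes a score into the range 0..1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def discriminator_loss(d_real, d_fake):
    """Low when the discriminator scores real samples near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Low when the discriminator is fooled into scoring fakes near 1."""
    return -math.log(d_fake)


def train_toy_gan(steps=500, lr=0.05, real_mean=4.0, seed=0):
    """Alternate one discriminator update and one generator update per step.

    Hypothetical toy setup: real samples are drawn from N(real_mean, 1),
    the generator is G(z) = z + b, the discriminator is D(x) = sigmoid(w*x + c).
    """
    rng = random.Random(seed)
    w, c = 0.1, 0.0  # discriminator parameters
    b = 0.0          # generator parameter (offset added to the noise)

    for _ in range(steps):
        real = rng.gauss(real_mean, 1.0)
        fake = rng.gauss(0.0, 1.0) + b

        # Discriminator step: gradient descent on discriminator_loss.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        grad_w = -(1.0 - d_real) * real + d_fake * fake
        grad_c = -(1.0 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: use the discriminator's feedback on the fake sample
        # (gradient descent on generator_loss with respect to b).
        d_fake = sigmoid(w * fake + c)
        grad_b = -(1.0 - d_fake) * w
        b -= lr * grad_b

    return b, w, c
```

Calling `b, w, c = train_toy_gan()` returns the learned generator offset and discriminator parameters. In a setup like this, the offset tends to drift toward the mean of the real data as the generator learns from the discriminator's feedback, although GAN training is known to oscillate and the result depends on the learning rate and the number of steps.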

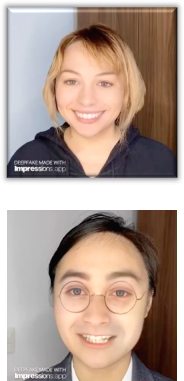

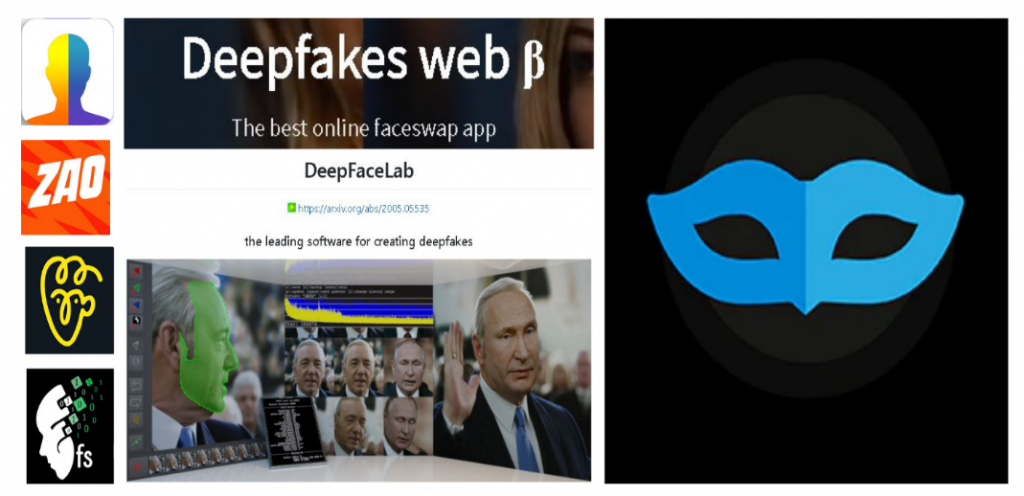

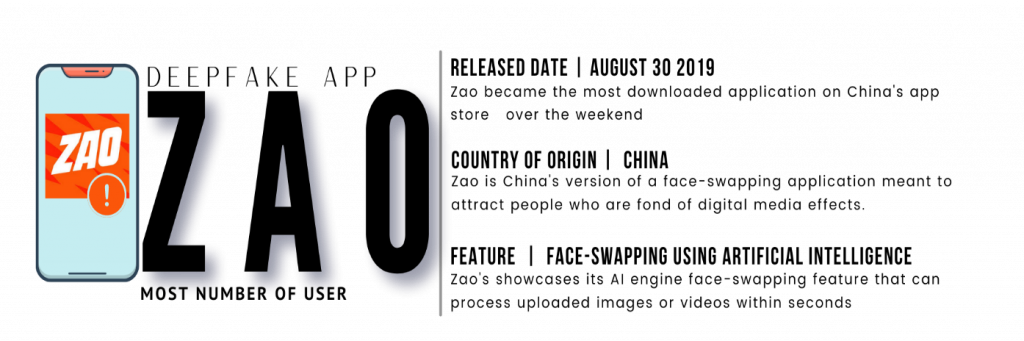

GAN, as the primary algorithm in DeepFake generation, can be implemented across different platforms such as mobile devices, computers and the web. For mobile, several applications have been released for both the Android and iOS platforms. Zao, FaceApp and Impressions are just some of the applications readily available for public use. However, given the limited computing power of mobile devices, the quality of the output is limited as well. This is why Faceswap, a computer application, was made available. It gives the user more computing power because it runs on a device such as a personal computer. However, it requires more data sets and technical knowledge, which makes it more complicated to use. This has sparked the growth of DeepFake web services, which offer users the computing power they need without the technical qualifications.

Some tools and applications for creating DeepFake:

Impressions is the first app that allows you to make and share high-quality DeepFakes. It can take as little as 5 minutes for users to create their own media.

Faceswap is a free and open-source DeepFake application. It is powered by TensorFlow, Keras, and Python, and can be used for learning and training purposes.

Deepfakes web β is a web service that lets you create DeepFake videos on the web. It uses deep learning to absorb the various complexities of face data. It can take up to 4 hours to learn and train from videos and images, and another 30 minutes to swap the faces using the trained model.

What makes DeepFake a threat?

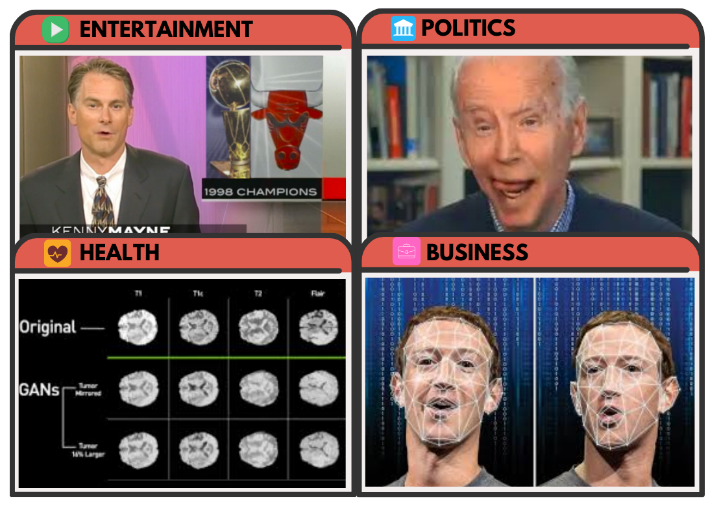

Traces of DeepFake are now very evident in different industries such as entertainment, politics, health and business, and its direct impact is identity theft.

Since the success of a DeepFake relies heavily on the quality of its training data sets, public figures are most at risk, as many images and videos of them are publicly available. What makes identity theft against public figures dangerous is that they are well known and influential, so their public statements and actions carry great weight. Plots and schemes with evil intent that use their stolen identities can influence entire industries.

It is disturbing to know that, besides the fun that DeepFake brings, it comes with threats like identity theft. What deeper threats does it pose?

Bypassing Security Features

One of the threats that DeepFake poses came to light because of Zao, a DeepFake mobile application from China that boasts of its capability to process images and videos precisely and quickly. Zao was criticized over its EULA, which states that the company holds all rights over media uploaded by its users. But there is more to it: because the user is required to upload different facial angles, it raises security concerns as well. With the gathered images and videos serving as a dataset, together with Zao’s DeepFake technology, the risk of bypassing security features such as facial recognition has come closer to reality.

How is it possible?

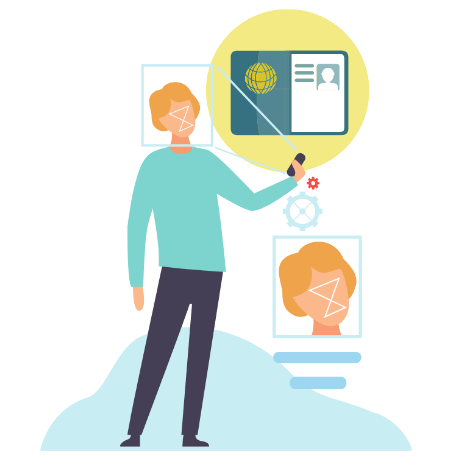

Imagine that a perpetrator who has acquired your personal information tries to open a bank account under your identity through a digital transaction. To proceed with the application, the perpetrator needs to prove his identity digitally. This is where facial recognition software, with its different layers of identity verification, comes into play.

One technique used by facial recognition software is to require a valid ID, which serves as its point of truth. To verify that the person on the ID is the actual person applying, it then requires a real-time expression check. This is where DeepFake’s capability to bypass security comes in. Static DeepFake images are easy to produce and can therefore be used to spoof static facial verification. What about a real-time facial expression check? Zao’s DeepFake technology can imitate a person’s look and mimic facial expressions in real time; combined, these capabilities provide a technique for deceiving this layer of security.

D-AA-S

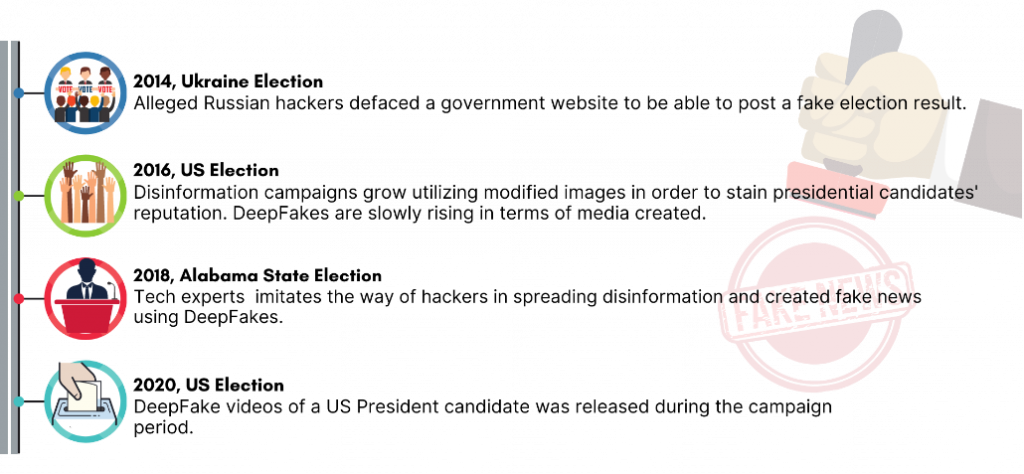

The next DeepFake threat on the list is D-AA-S, also known as Disinformation-as-a-service. It is a service that aims to spread false information in order to skew a situation in favor of a certain party, and it is not something new. Like other deep web services such as R-AA-S, D-AA-S is offered on the dark web to the highest bidder. During the 2014 Ukraine election, the Internet Research Agency, commonly known as the “Troll Factory”, first made its appearance by defacing a government website, on which it posted a fake election result; the posted media was reportedly a product of DeepFake technology. This created great confusion among the citizens of Ukraine.

In the recently held US elections, a DeepFake video of one of the presidential candidates circulated on the web. It was allegedly produced by a Russian D-AA-S provider hired by one of the candidate’s rivals in order to discredit him. This divided the country’s citizens into those who believed the DeepFake video and those who did not.

Previously, D-AA-S came in the form of fake news articles, fake documents and fake images. Today, as people become highly dependent on the web, D-AA-S has become more effective at misinforming people by creating realistic news in the form of DeepFake media circulating on the internet.

As we become more aware of and educated about security concerns, criminals have to ‘up’ their game as well. Hence, we have identified the last major threat that DeepFake poses. Humans, the weakest link in the security chain, remain an easy target for cyber criminals: the first recorded DeepFake phishing scam happened in March 2019.

Evolution in the Phishing Landscape

The CEO of a UK-based energy firm was contacted by his alleged “boss”. During their conversation, the CEO was instructed to transfer a large sum of money amounting to $243,000. Although the voice sounded the same, it was later discovered that the “boss” the CEO had talked with was a different person. It was concluded that the voice at the other end of the line was crafted DeepFake audio mimicking the boss’s voice.

What happened to the CEO showcases how the game has changed in the phishing landscape. As people become more aware of phishing, cyber criminals need to advance their techniques. With DeepFake, the staging that cyber criminals use in their phishing attacks has improved in such a way that their deception becomes more successful.

For example, cyber criminals previously pretended to be a third party in order to extract information from their targets. Now, they pretend to be someone affiliated with the target to gain access to personal information.

Knowing all these existing threats, what other dangers may arise with the continuous innovation in DeepFake technology?

Future DeepFake Threats

Future threats may include an increase in the number of clickbait items or digital advertisements on the web that showcase DeepFake-generated media. A continuous growth in the number of identity theft cases can also be expected. Moreover, there is concern that DeepFake can be used to distribute malware, serving as a new attack vector that may lead to backdoor access to the victim’s machine.

Lastly, there is Ransomfake: ransomware made more effective with the use of DeepFake. As ransom stealers grow in number, ransomware that steals personal media files from the victim’s machine may start to appear. These stolen files may be used to create DeepFake media with which the attacker blackmails the victim into paying.

In our new situation, fewer face-to-face encounters are the ‘new norm’ of daily life. As we shift to the digital environment, malicious uses of DeepFake, such as bypassing security features, misinformation, and an evolved phishing landscape, put people at even greater risk of becoming victims. This new ‘crease’ in cybersecurity challenges us to understand what DeepFake is and what can be done to prevent it from being exploited. Preparing oneself to avoid becoming a victim should always be a priority.

Being informed about the different cyberthreats is a good start to avoid being a victim. Since we know that manipulated media is circulating on the internet, we must pay attention to details. Always check for inconsistencies in the things around you. Stay vigilant, be aware of strange things you see, and stay away from suspicious posts.

Fake information is purposely disseminated to the public. Taking the time to pause, check and verify the things you see on the web is a good practice for not being easily deceived. In today’s world, data is the new gold. Think before sharing.

Lastly, as the volume of damaging DeepFake media continues to rise, well-known companies in the cyberworld have begun to fight against it. Pioneered by Google, followed by Facebook’s DeepFake Detection Challenge and recently joined by Microsoft’s detection tool, these efforts have made it possible to detect DeepFakes and slow down their growth.

Knowing how to stay safe and avoid becoming a victim of cyber criminals will not stop a good technology from being exploited, but it will lessen the damage it brings to every individual.

Reference:
